Microsoft Azure Tool Stack for Data & Analytics

There are options! For example, I just stood up an Azure for Data & Analytics solution that has no logic apps, Azure function, event hub, blob storage, databricks, HDI, or data lake. The solution is not event-driven and takes an ELT (extract, load, and then transform) approach. It reads from sources via Azure Data Factory and writes to an Azure Database logging the ELT activities in an Azure Database as well. Now, how simple is that? Kindly, you don’t have to build the Taj MaSolution to be successful. You do have to fully understand your customer’s reporting and analysis requirements, and who will be maintaining the solution on a long-term basis.

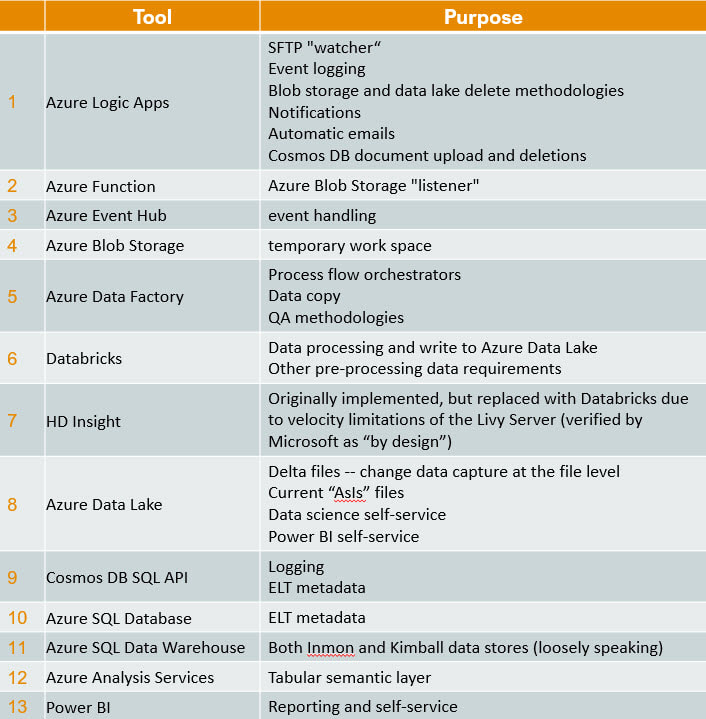

If you still wish to swan dive into the pool, here’s the list!

Technical Critical Success Points (CSP)

Every single line item above has CSPs. How long do you want to hang out with me reading? I’m with you! Consequently, here are my top three CSP areas.

Azure Data Factory (ADF)

- Parameterize the living bejeebers out of everything. Assuming an ELT methodology, have one dataset for every source, one dataset for every destination, and one pipeline for every destination. Even better, have one linked service for each type (SQL Server, SFTP, Cosmos DB etc.) Reality check: this is actually impossible as not everything in ADF is parameterized, but get as close as you can get!!

- Understand volume (file size and number) vs velocity (frequency and speed) of your files. This will help you make the ADF Data Flow vs Databricks vs HDI decision.

- Incorporate logging right at the start. Log every pipeline start, success, and failure. Also log every success or failure data copy. I’ve gone overboard on this and logged the success or failure of every lookup and stored procedure activity. I encourage you to find your own balance.

- Use ADF for your data lake data validation QA process. Minimally, let’s assume your are verifying source-to-target row counts after every ingestion. Put this in an ADF pipeline.

- Use ADF for orchestration, but don’t smash everything into one pipeline — there is a 40 activity limitation which you will quickly exceed especially with a good logging methodology. It also becomes unmanageable to troubleshot multi-process pipelines. Have a grandparent orchestrator (like a SSIS master package) which calls subject or process area orchestrators, which in turn call copy pipelines. Your DevOps team will thank you profusely!

- Use a Cosmos DB document, SQL Server table or TXT file for environment properties. Remember environments in SSIS? It is pretty much the same idea. There should be no hard-coded URLs, blob storage or data lake locations, SQL data base names etc. in ADL parameters. Any parameter value that changes between DEV, STG, UAT and PRD should be in the environment lookup activity. There should be no global find and replace ever needed at time of deployment. I’ve attached a sample environment properties JSON document at the bottom of this post.

Azure Data Lake (ADL)

- Plan your security methodology before you load your first file. Map it out in Excel or tool of choice. Data lake security cannot be an afterthought. If you skip this, there is a high probability that all your pipelines will need rework.

- Don’t leave a bunch of junk in your trunk! Clean up. Tomorrow never comes — oddly, it is always tomorrow.

- Assuming your data lake represents your persisted storage for reporting, use Azure Blog Storage (ABS) for temporary staging and pre-processing. This keeps your ingestion work area separate from your long-term storage. ABS is cheap and a lifecycle management policy will automatically clean up temporary work areas.

- If at all possible, divide your data lake into two areas: deltas and current. For each file ingestion, deltas hold one file for adds, one file for updates and one file for deletes. This is like file-level change data capture. “Current” is what the source system looks like right now. There is no history in “current”, but “current” is created by summing up all of the deltas. This allows you then to only have one QA process on current as there is an ingestion dependency.

- Use parquet files for data scientists as they will know how to use them and be wanting point-in-time analysis (deltas). Provide *.CSV (current snapshot) files for non-technical users. Be honest with me here, have you ever known a non-technical self-service user to NOT want to open a data lake file in Excel? Point made.

Data Driven Ingestion Methodology

- A data-driven ingestion methodology derives all of its source, destination, transform, and load information from a file, table or document. (If you have worked with BIML for SSIS, this is a very similar concept.) The properties in the metadata object become ADF parameter values, are used by Databricks or HDI for file ingestion, and are used to create correct data types in SQL Server, just to name a few uses. Metadata runs the whole show. I have attached a sample file metadata JSON document at the bottom of this post.

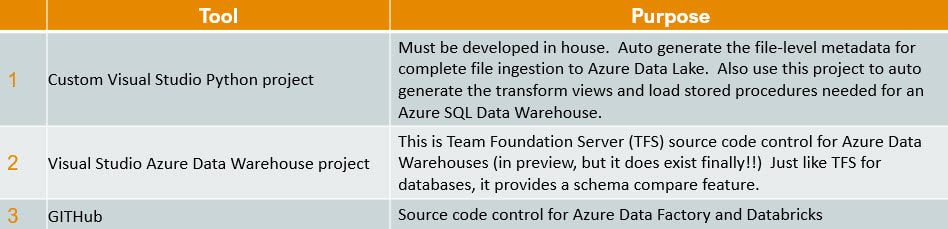

- Auto generate the metadata. Either some poor soul has to type all of this out, or a Visual Studio Python project can read each source file and spill out the metadata needed for each environment. The file metadata document I’ve supplied below was auto generated…along with hundreds of others. Can you even imagine creating this by hand when one source table has over a hundred columns?

- If your transform and load has followed a repeatable process, you can also auto generate the transform views and load stored procedures needed by an Azure SQL Data Warehouse (ADW) where CTAS() is your bff (best friend forever). This will require an extremely accurate source-to-target mapping document. I would consider the auto-gen process successful if it correctly turns out 90% of what is needed for an ADW load.

Wrapping It Up

Supporting Applications

It is only honest to share three supporting applications that help to make all of this possible.

Link to creating a Python project in Visual Studio.

Link to Azure SQL Data Warehouse Data Tools (Schema Compare) preview information. At the writing of this post, it still has to be requested from Microsoft and direct feedback to Microsoft is expected.

Link to integrate Azure Data Factory with GitHub.

Link to integrate Databricks with GitHub.

Sample Files

Below are example of data driven metadata and environment properties. Both are in JSON format.

environmentproperties.json

environmentproperties.jsonDownload File

data_driven_metadata.json

data_driven_metadata.jsonDownload File