This revamped curriculum outlines the key focus areas for building data engineering skills, with an estimated timeframe for each.

Foundational Knowledge (1-3 weeks)

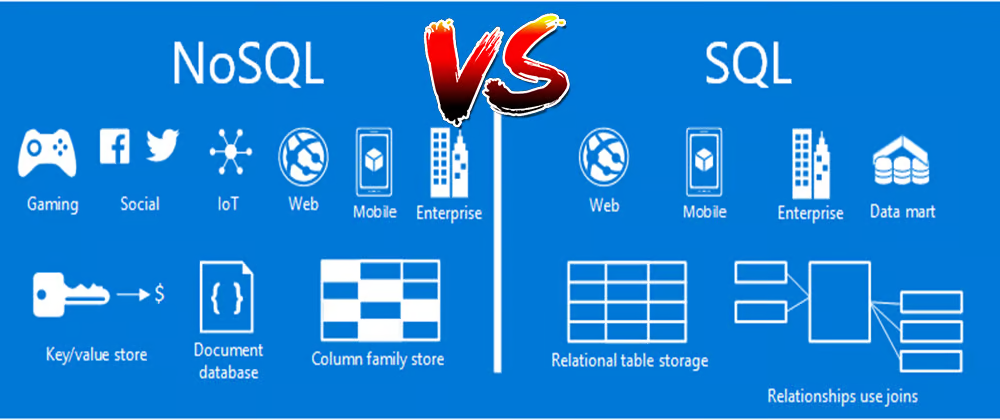

- Databases (SQL & NoSQL): Understand the fundamentals of relational and non-relational databases for structured and unstructured data management.

- Distributed Computing: Grasp the principles of distributed systems to design scalable and fault-tolerant data processing architectures.

Data Modeling (2-4 weeks)

- Data Modeling Techniques: Explore various modeling approaches like relational and dimensional to structure data efficiently for analytics.

- Normalization & Denormalization: Learn when to normalize a design to minimize redundancy and when to denormalize it to improve query performance.

- Schema Design: Understand considerations for designing schemas that facilitate efficient data storage, retrieval, and analysis across diverse use cases.
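As a minimal sketch of the normalization trade-off described above, the example below stores the same orders once in a normalized pair of tables and once in a denormalized wide table, using Python's built-in `sqlite3` module. The table names, column names, and sample rows are hypothetical and exist only for illustration.

```python
import sqlite3

# Hypothetical schema and data: all names here are illustrative assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Normalized: customer attributes live in exactly one place.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL,
        city        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'Ada', 'London'), (2, 'Lin', 'Taipei');
    INSERT INTO orders VALUES (10, 1, 25.0), (11, 1, 40.0), (12, 2, 15.0);

    -- Denormalized: customer attributes are copied onto every order row,
    -- trading redundancy for join-free reads.
    CREATE TABLE orders_wide AS
    SELECT o.order_id, o.amount, c.name, c.city
    FROM orders AS o
    JOIN customers AS c USING (customer_id);
""")


def revenue_by_city_normalized(conn):
    """Aggregate revenue per city; needs a join at query time."""
    return conn.execute("""
        SELECT c.city, SUM(o.amount)
        FROM orders AS o
        JOIN customers AS c USING (customer_id)
        GROUP BY c.city
        ORDER BY c.city
    """).fetchall()


def revenue_by_city_denormalized(conn):
    """Same aggregate, read from the wide table without a join."""
    return conn.execute("""
        SELECT city, SUM(amount)
        FROM orders_wide
        GROUP BY city
        ORDER BY city
    """).fetchall()


normalized_result = revenue_by_city_normalized(conn)
denormalized_result = revenue_by_city_denormalized(conn)
```

Both queries return the same totals. The normalized design updates a customer's city in one row, while the wide table must rewrite every order that carries a copy of it.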

Data Storage (3-5 weeks)

- Database Types (SQL & NoSQL): Learn the main database categories (relational, key-value, document, column-family, and graph) and the data characteristics and access patterns each one suits.

- Database Selection: Master the process of choosing the right database for specific use cases, ensuring optimal scalability, performance, and reliability.

- Database Administration & Optimization: Develop expertise in managing and fine-tuning database performance through indexing, query optimization, and resource allocation strategies.

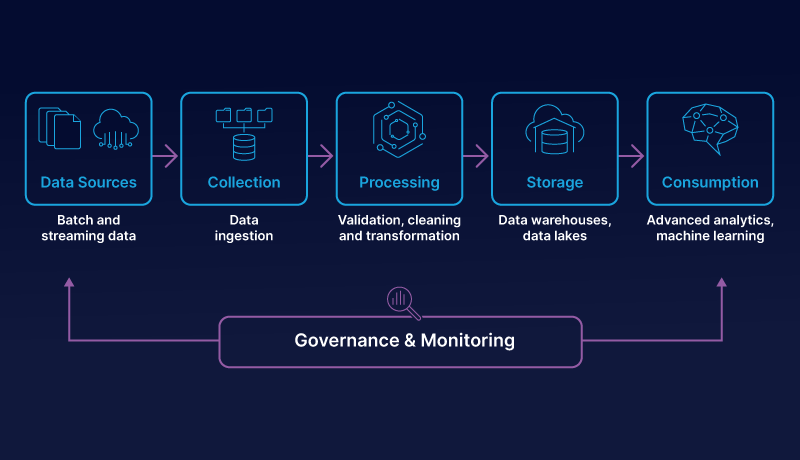
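To make the indexing point above concrete, here is a small sketch that asks SQLite for its query plan before and after an index is created. It assumes SQLite through Python's built-in `sqlite3` module; the `orders` table, the `idx_orders_customer` index, and the `query_plan` helper are hypothetical names, and other database engines report plans in their own formats.

```python
import sqlite3

# Hypothetical table with enough rows for the planner's choice to matter.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders ("
    "order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 100, float(i)) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"


def query_plan(conn, sql, params):
    """Return SQLite's human-readable plan for a query as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    # The last column of each plan row holds the textual description.
    return " | ".join(row[-1] for row in rows)


# Without an index, the filter on customer_id requires a full table scan.
plan_before = query_plan(conn, QUERY, (42,))

# After indexing the filtered column, the planner can search the index.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = query_plan(conn, QUERY, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

Comparing the two printed plans is a quick way to confirm that an index is actually used, which matters because an index also adds write and storage cost.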

Data Processing (2-4 weeks)

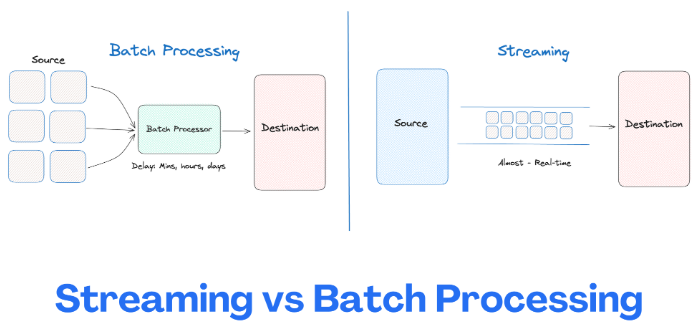

- Batch Processing Frameworks: Explore frameworks like Apache Spark and Hadoop MapReduce for efficient batch processing of large datasets.

- Stream Processing Frameworks: Learn about Apache Kafka (an event streaming platform, with Kafka Streams for processing) and Apache Flink for real-time processing and analysis of continuous data streams.

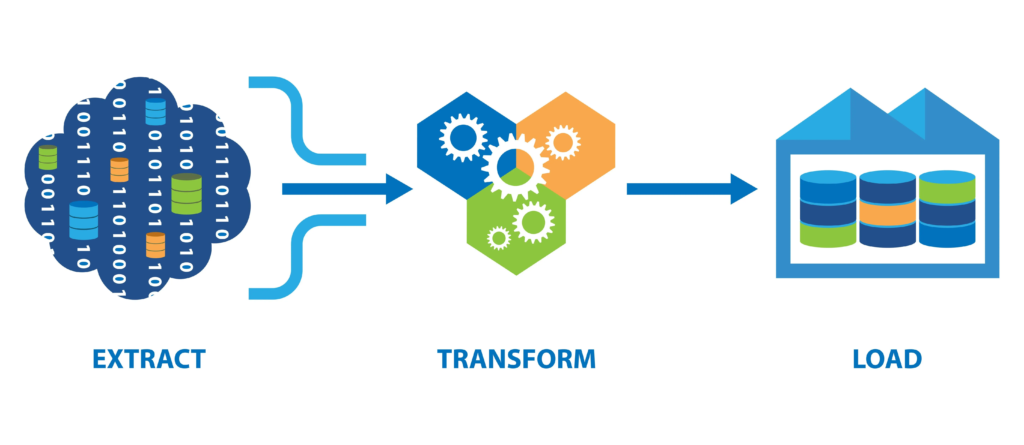

- ETL Processes & Tools: Understand how to extract, transform, and load data using ETL tools to ensure data quality and accessibility for analytics.

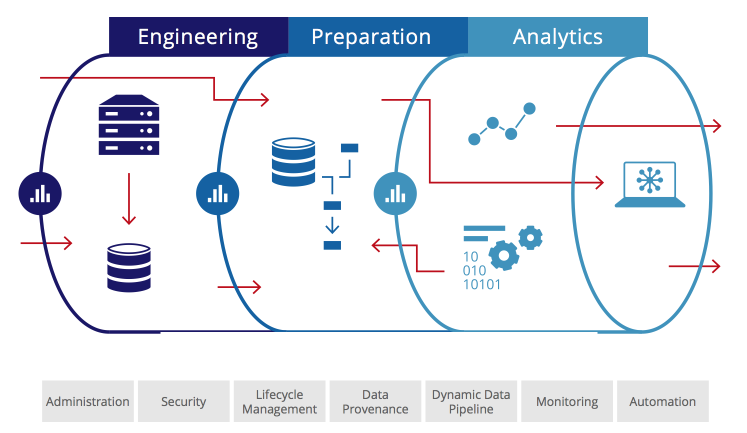
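The extract, transform, and load steps mentioned above can be sketched with the Python standard library alone. This is an illustrative toy rather than a production ETL tool: the inline CSV, the `orders` table, and the function names are all assumptions made for the example.

```python
import csv
import io
import sqlite3

# Hypothetical raw input with typical problems: stray whitespace,
# inconsistent casing, and one unparseable amount.
RAW_CSV = """order_id,customer,amount
1, Ada ,25.50
2,lin,not-a-number
3,ADA,40
"""


def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize types and casing; set aside rows that fail."""
    clean, rejected = [], []
    for row in rows:
        try:
            record = (
                int(row["order_id"]),
                row["customer"].strip().lower(),
                float(row["amount"]),
            )
        except ValueError:
            rejected.append(row)
        else:
            clean.append(record)
    return clean, rejected


def load(conn, records):
    """Load: write the cleaned records into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
clean_rows, rejected_rows = transform(extract(RAW_CSV))
load(conn, clean_rows)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
```

Keeping rejected rows instead of silently dropping them makes it possible to report on data quality after each run.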

Data Integration (4-8 weeks)

- Data Ingestion: Master techniques for gathering data from various sources like databases, APIs, and files for centralized analysis.

- Data Integration Patterns: Learn about best practices for harmonizing disparate data sources to facilitate seamless data flow and interoperability.

- Data Integration & Synchronization Tools: Explore tools for automating data workflows, synchronizing data across platforms, and maintaining data consistency.
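One common way to keep a target in sync with a source is incremental loading with a high-watermark, sketched below in plain Python. The record layout (`id`, `email`, `updated_at`) and the `sync_incremental` function are hypothetical, and the sketch assumes the source exposes a reliable, increasing modification timestamp.

```python
def sync_incremental(source_rows, target, watermark):
    """Upsert rows modified after `watermark` into `target`, keyed by id.

    Returns the new watermark: the largest `updated_at` value seen so far.
    """
    new_watermark = watermark
    for row in source_rows:
        if row["updated_at"] > watermark:
            target[row["id"]] = dict(row)  # insert or overwrite (upsert)
            new_watermark = max(new_watermark, row["updated_at"])
    return new_watermark


# Hypothetical source records; `updated_at` is an integer timestamp.
source = [
    {"id": 1, "email": "ada@example.com", "updated_at": 100},
    {"id": 2, "email": "lin@example.com", "updated_at": 105},
]
target = {}

# First run copies everything because the watermark starts at 0.
watermark = sync_incremental(source, target, watermark=0)

# Later, one source row changes; the next run copies only that row.
source[0] = {"id": 1, "email": "ada@new.example.com", "updated_at": 110}
watermark = sync_incremental(source, target, watermark)
```

This approach does not detect deleted rows, and it can miss rows that share a timestamp with the watermark, so real pipelines often pair it with change data capture or periodic full reconciliation.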

Data Transformation (4-6 weeks)

- Transformation Techniques: Become proficient in transforming data into analysis-ready formats using SQL, Python, or specialized tools like Apache Beam.

- Data Cleansing, Normalization & Enrichment: Understand processes for enhancing data quality, integrity, and usability to prepare data for accurate analysis.

- Data Pipeline Orchestration & Scheduling: Learn to automate and manage complex data workflows for timely and reliable data processing across the data infrastructure.
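At its core, pipeline orchestration means running tasks in an order that respects their dependencies. The sketch below illustrates that idea with `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The task names and the `run_pipeline` helper are hypothetical, and dedicated orchestrators add scheduling, retries, and monitoring on top of this.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "join": {"clean_orders", "extract_customers"},
    "publish_report": {"join"},
}


def run_pipeline(dag, tasks):
    """Run every task after all of its dependencies have finished.

    TopologicalSorter raises graphlib.CycleError if the graph has a cycle.
    """
    executed = []
    for name in TopologicalSorter(dag).static_order():
        tasks[name]()
        executed.append(name)
    return executed


# Stand-in task bodies that only record that they ran.
log = []
TASKS = {name: (lambda n=name: log.append(n)) for name in PIPELINE}

order = run_pipeline(PIPELINE, TASKS)
print(" -> ".join(order))
```

Declaring dependencies as data, rather than hard-coding the call order, lets independent tasks such as the two extracts be reordered or parallelized without touching the task code.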

Data Quality & Governance (3-5 weeks)

- Data Quality Metrics & Monitoring: Understand how to assess and maintain data accuracy, completeness, and consistency for reliable decision-making.

- Data Governance Principles & Frameworks: Learn about establishing policies and processes for effective data asset management, compliance, security, and accountability.

- Data Quality Checks & Validation: Implement automated processes to detect and resolve data anomalies and inconsistencies, ensuring high-quality data for analytics.
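Automated checks for completeness, uniqueness, and valid ranges can start as small as the sketch below. The `check_quality` function, the field names, and the thresholds are hypothetical; dedicated validation libraries offer richer rules, but the underlying idea is the same.

```python
def check_quality(rows, required, unique_key, ranges):
    """Return a list of (row_index, message) for every rule violation."""
    issues = []
    seen = set()
    for i, row in enumerate(rows):
        # Completeness: required fields must be present and non-empty.
        for field in required:
            if row.get(field) in (None, ""):
                issues.append((i, f"missing {field}"))
        # Uniqueness: the key must not repeat across rows.
        key = row.get(unique_key)
        if key in seen:
            issues.append((i, f"duplicate {unique_key}={key}"))
        seen.add(key)
        # Validity: numeric fields must fall inside the allowed range.
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if value is not None and not (low <= value <= high):
                issues.append((i, f"{field} out of range"))
    return issues


# Hypothetical records with one empty email, one repeated id, one bad age.
rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 2, "email": "c@example.com", "age": 212},
]
issues = check_quality(
    rows, required=["id", "email"], unique_key="id", ranges={"age": (0, 120)}
)
for index, message in issues:
    print(f"row {index}: {message}")
```

Returning the violations instead of raising on the first one lets a pipeline decide whether to quarantine rows, alert, or stop.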

Cloud Technologies (7-9 weeks)

- Cloud Platforms (AWS, Azure, GCP): Gain proficiency in leveraging cloud platforms for scalable data storage, processing, and analysis of large datasets.

- Cloud-Based Data Services: Learn about cloud storage and processing services for efficient data management and analytics workflows.

- Cloud Security & Compliance: Understand how to implement robust security measures to ensure data confidentiality, integrity, and availability while adhering to regulations.

Big Data Technologies (6-8 weeks)

- Distributed Storage Systems: Explore systems like Hadoop HDFS (a distributed file system) and Amazon S3 (an object store) for storing and managing massive datasets in distributed environments.

- Distributed Computing Frameworks: Gain expertise in frameworks like Apache Spark and Apache Flink for parallel processing and analysis of large datasets.

- Containerization & Orchestration: Understand containerization (Docker) and orchestration (Kubernetes) technologies for packaging and deploying data-driven applications consistently across diverse computing environments.
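The parallel processing model behind the distributed computing frameworks above can be illustrated with a single-process word count in the map, shuffle, and reduce style. This is a conceptual sketch only: the function names and sample partitions are hypothetical, and real frameworks run each phase across many machines with fault tolerance.

```python
from collections import defaultdict


def map_phase(partition):
    """Map: turn each line of one partition into (word, 1) pairs."""
    return [(word.lower(), 1) for line in partition for word in line.split()]


def shuffle(mapped_partitions):
    """Shuffle: group values from all partitions by key."""
    groups = defaultdict(list)
    for pairs in mapped_partitions:
        for key, value in pairs:
            groups[key].append(value)
    return groups


def reduce_phase(groups):
    """Reduce: combine the grouped values for each key."""
    return {key: sum(values) for key, values in groups.items()}


# Hypothetical input, already split into two partitions.
partitions = [
    ["spark reads data", "flink reads streams"],
    ["Spark writes data"],
]

# Each partition is mapped independently, which is the step a cluster
# would run in parallel on separate workers.
counts = reduce_phase(shuffle(map_phase(p) for p in partitions))
```

Because the map step touches only its own partition, adding workers scales the map phase, while the shuffle is where data has to move between machines.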

Data Visualization & Reporting (2-5 weeks)

- Data Visualization Tools: Explore tools like Tableau and Power BI for transforming complex data into insightful visualizations for effective communication.

- Dashboard Design & Storytelling: Learn how to create compelling dashboards that effectively convey key insights and trends to stakeholders.

- Interactive Visualizations & Reports: Develop expertise in creating dynamic and user-friendly data products for enhanced engagement and understanding of data-driven insights.

Advanced Topics (4-9 weeks)

- Explore advanced areas like real-time analytics, data lakes, and graph databases to address complex data processing challenges and unlock new insights.

- Stay updated with emerging technologies and trends to leverage the latest tools and methodologies for continuous improvement in the data engineering field.

Practical Projects & Experience (5-11 weeks)

- Real-World Projects: Work on real-world data engineering projects to apply your skills in practical scenarios.

- Collaborative Projects: Collaborate with peers on open-source projects or participate in hackathons to gain experience.

- Internships/Jobs: Seek internships or data engineering job opportunities to gain practical experience and professional development.

This learning path provides a roadmap for aspiring data engineers. It outlines essential knowledge and skills in a structured format, including estimated timeframes for each section. The curriculum covers foundational topics like databases and distributed computing, as well as advanced concepts like real-time analytics and cloud technologies. Throughout the path, hands-on experience is emphasized through real-world projects, collaboration opportunities, and internships.